Project Teams

Blossom VR

Team Lead: Mehar Samra

Blossom VR is a game that aims to creatively enhance meditation and relaxation experiences in VR. Inspired by the effectiveness of biofeedback treatments, we developed a minimum viable product that uses movements in VR and Apple Watch biosensor data to give users feedback on how well they are performing a relaxation technique and how they improve over time. So far, we have built mindfulness minigames such as harmonic breathing, movement meditation, tai chi, and heart-rate biofeedback, so there is a relaxing experience for everyone. Through these minigames, players can also level up characters and gain collectibles. Overall, Blossom VR is a relaxing world where players can let the stress of life fade away and reconnect with their inner selves.

Looking for 3D modelers.

ISAACS (Research) - Immersive Semi-Autonomous Aerial Command System

Team Leads: Archit Das, Harris Thai

ISAACS is an undergraduate-led research group within the Center for Augmented Cognition of the FHL Vive Center for Enhanced Reality. Our research is in human-UAV interaction, with a focus on teleoperation, telesensing, and multi-agent interaction.

We are also collaborating with the Lawrence Berkeley National Laboratory to perform 3D reconstruction of the environment via state-of-the-art methods in radiation detection. Our vision is to create a scalable, open-source platform for Beyond Line of Sight Flight that is compatible with any UAV or sensor suite.

TutoriVR

Team Lead: Daniel He

Virtual Reality painting is a form of 3D painting done in a Virtual Reality (VR) space. Because it is a relatively new art form, there is growing interest within the creative practices community in learning it. Currently, most users learn from community-posted 2D videos on the internet, which are screencast recordings of an instructor's painting process. While such an approach may suffice for teaching 2D software tools, these videos by themselves fail to deliver crucial details that the user needs in order to understand actions in a VR space. We are building a robust and deployable VR-embedded tutorial system that supplements video tutorials with 3D and contextual aids directly in the user's VR environment, based on the TutoriVR research study.

Looking for designers.

Virtual Bauer Wurster

Virtual Bauer Wurster (VBW) is an app that allows students to edit and publish their architectural models. VBW enables users to share and explore 3D environments together.

This project is from the XR Lab, a research lab within the College of Environmental Design whose goal is to develop innovative, impactful research and applications in VR/AR/MR.

Expected experience in Unity XR.

Special Agent

Team Lead: Yaoxing Yi

Special Agent began as a final project for the Extended Reality DeCal. We now want to (1) make it a real video game and (2) port it to multiple platforms (PC/Mac/consoles).

Special Agent is a first-person shooter survival horror action-adventure game. In this video game, you will become Special Agent [name redacted] and complete a series of spectacular, adventurous missions to expose the mastermind's ultimate behind-the-scenes conspiracy! You will become a hero to save this hopeless world! You know what you're up against? Zombies, assassins, traps, spies, and the armed-to-the-teeth military that hunts you down! It's time to do something to change this world! Join us! Join this epic conquest!

Our video game is developed in Unity, which means you need access to a laptop or desktop computer that can run Unity. We also expect your computer to be able to run modeling software like Maya and Blender, because we make 3D animations!

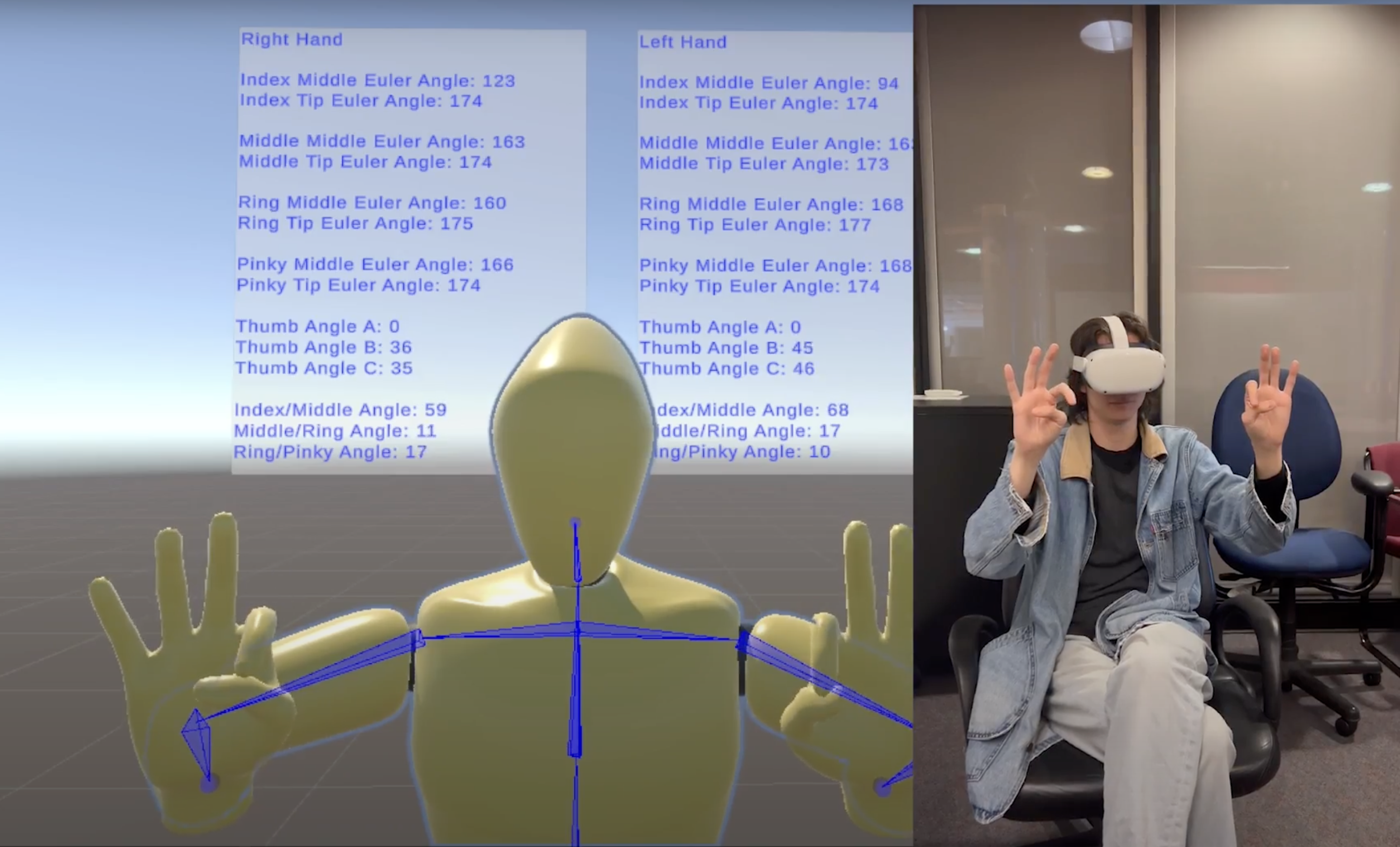

HandIF - Hand Interaction Framework

Team Lead: Alexander Angulo Rios

The HandIF team looks to create both the hardware and software solution for a fully immersive hand-interaction experience in VR, allowing the grabbing, manipulation, and use of elements in a virtual environment, paired with haptic gloves to simulate touch. Currently, we are working on the software behind the framework to create an accurate and realistic hand tracking experience that can be used in training scenarios, simulations, and other environments where precise and robust hand-tracking is necessary. We will work to create and integrate the hardware as well, creating a complete hand-interaction system with maximum immersion.

OpenARK (Research)

OpenARK is an open-source wearable augmented reality (AR) system founded at UC Berkeley in 2016. The C++ based software offers innovative core functionalities to power a wide range of off-the-shelf AR components, including see-through glasses, depth cameras, and IMUs.

OpenARK is an open-source Augmented Reality SDK that will allow you to rapidly prototype AR applications.

Expected computer vision experience.

ROAR (Research)

ROAR stands for Robot Open Autonomous Racing, and it is the FHL Vive Center for Enhanced Reality's autonomous driving research group.

Our goal is to advance XR and AI technologies used in vehicles, through a fun intercollegiate driving competition at the heart of the iconic Berkeley campus.

Expected hardware experience.

TacticalMR (Research)

Team Leads: Edward Kim, Daniel He

The vision of this research is to train humans by augmenting their experience in virtual reality to enhance skills related to dynamic tactical coordination or interaction with other dynamic entities. To augment one's experience in VR, the content of the situations, or scenarios, that one experiences in VR is crucial. Hence, to achieve our vision, we aim to develop an algorithm that procedurally generates a customized curriculum, or sequence, of training scenarios (displayed in VR) according to a trainee's learning progression.

This algorithm entails the following elements: (a) modeling and generating realistic behaviors of environment agents in VR, (b) modeling and tracing the trainee's knowledge and learning progression, and (c) adaptive synthesis of training scenarios in accordance with the trainee's learning progression.
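To make elements (b) and (c) more concrete, here is a minimal, purely illustrative sketch in Python. It is not the project's actual algorithm: the `Scenario`, `TraineeModel`, and `next_scenario` names, the single skill score, the `learning_rate`, and the `stretch` margin are all assumptions made up for this example, and element (a), agent behavior generation, is not covered.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """Hypothetical training scenario with a difficulty in [0, 1]."""
    name: str
    difficulty: float


@dataclass
class TraineeModel:
    """Illustrative stand-in for element (b): one estimated skill in [0, 1]."""
    skill: float = 0.0
    learning_rate: float = 0.3  # assumed update step, not from the project

    def update(self, scenario: Scenario, succeeded: bool) -> None:
        # Only informative outcomes move the estimate: passing a scenario
        # harder than the current skill raises it, and failing an easier
        # one lowers it. Other outcomes leave the estimate unchanged.
        gap = scenario.difficulty - self.skill
        if (succeeded and gap > 0) or (not succeeded and gap < 0):
            self.skill += self.learning_rate * gap


def next_scenario(model: TraineeModel, pool: list[Scenario],
                  stretch: float = 0.1) -> Scenario:
    """Illustrative stand-in for element (c): pick the scenario whose
    difficulty is closest to slightly above the trainee's estimated skill."""
    target = min(1.0, model.skill + stretch)
    return min(pool, key=lambda s: abs(s.difficulty - target))


# Example usage with a made-up scenario pool.
pool = [Scenario("easy", 0.1), Scenario("medium", 0.5), Scenario("hard", 0.9)]
trainee = TraineeModel(skill=0.45)
chosen = next_scenario(trainee, pool)
trainee.update(chosen, succeeded=True)
```

A real system would likely replace the single skill score with a richer knowledge-tracing model and synthesize scenarios rather than choose from a fixed pool; the sketch only shows the feedback loop between tracking progress and selecting the next scenario.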

The training technique we develop using VR could potentially help people in various application domains. For example, as factories are automated, we can potentially train factory workers in VR to safely coordinate with various robots before physically interacting with them. We also anticipate training groups of officers in VR for rescue missions amid natural disasters, prior to deployment, where mistakes or inexperience may lead to casualties. We further foresee our technology helping sports players coordinate and work as a team more effectively.

For the scope of our project, we are focusing on training people to enhance their skills in an esport called EchoArena, an Oculus virtual reality game. Our goal is to train people to enhance their (1) situational awareness, (2) tactical decision-making skills, and (3) execution of decisions in order to master EchoArena.

Expected experience in Unity XR and/or 3D modeling.

RehabVR (Research)

Team Leads: Edward Kim, Alton Sturgis, James Hu

We currently lack methods to reliably generate training experiences for cases such as the physical rehabilitation of stroke patients. Over the years, advances in virtual and augmented reality (VR/AR) have considerably reduced the cost of augmenting experience.

Using an algorithm and methodology similar to those of the previously mentioned project, "Training Humans in VR for Sports," we can support the rehabilitation of stroke patients using VR/AR by synthesizing scenarios with incrementally more difficult tasks, personalized to each patient's physical limitations.

This research is in collaboration with Stanford Medical School (Neurology Dept.)

Expected experience in Unity XR and/or 3D modeling.